In the first part of this series of posts I told you about computer vision, its history, and some of the problems we face in this particular field. Now I’d like to introduce you to the fascinating world of deep learning. I’ll start by explaining what deep learning really is and why it’s called that, then I’ll present a brief summary of its history, and towards the end of the article you’ll find out how it relates to computer vision.

What is deep learning?

Inventors, writers and all sorts of creative people have always dreamed about thinking machines. This desire eventually led to the emergence of artificial intelligence (AI) as a field of research, which quickly managed to tackle problems that were hard for humans to solve. Further down the line, researchers realized that the true challenge for AI was to solve problems that seem simple and even automatic to human beings, such as recognizing an object in an image.

Deep learning is all about solving problems that are easy for humans but hard for machines. Seemingly simple brain activities that we take for granted, such as recognizing a sweater as a sweater and not a cardigan, involve so many variables and experiences that getting a machine to reproduce them reliably is a complex task. So, how does deep learning do this? Ian Goodfellow, Yoshua Bengio, and Aaron Courville answer this question in their book Deep Learning. They state that “This solution (deep learning) is to allow computers to learn from experience and understand the world in terms of a hierarchy of concepts, with each concept defined through its relation to simpler concepts. The hierarchy of concepts enables the computer to learn complicated concepts by building them out of simpler ones”. In simpler terms, deep learning is an approach to finding automatic solutions to problems that are intuitive to human beings. It works by feeding algorithms knowledge gathered from experience (that is, data) so that they can learn a useful hierarchy of concepts on their own.

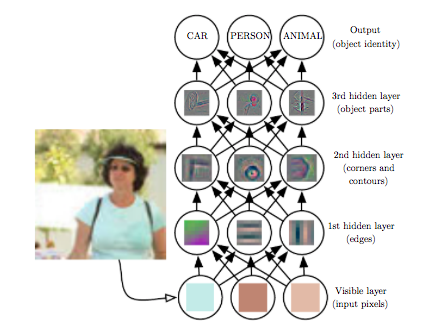

This is an example of a hierarchy of concepts for a deep learning model. The simplest concepts are colors, followed by “low-level” features such as edges. The most complex concepts are the abstract ones; in this case, car, person, and animal. Image from the book Deep Learning by Goodfellow, Bengio, and Courville.

Okay, so now it is clear what deep learning is and what it does, but why is it even called “deep learning”? What does “deep” refer to? To understand this, we have to go back to the hierarchy of concepts. As we saw before, deep learning algorithms solve problems by learning a hierarchy of concepts, which lets them build complicated concepts out of simpler ones. But what happens when the simpler concepts are not so simple? Well, then another layer of even simpler concepts has to be added to the hierarchy, so that we can use those to describe the concepts that in turn describe the hard ones. And if they’re still not simple enough? Then we add another layer of concepts! You get it now, right? The “deep” part in deep learning refers to the depth of the hierarchy of concepts, which (please, believe me) can get seriously deep!
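Here is a toy Python sketch of how stacking layers builds a harder concept out of simpler ones. It assumes a very simple neuron model (a weighted sum followed by a step activation). The “hard” concept is XOR: the output is 1 only when exactly one input is 1, and no single neuron of this kind can compute it. XOR can, however, be assembled from two simpler concepts, OR and NAND, which a first layer computes and a second layer combines with AND. The names `neuron`, `layer` and `xor_network` and all of the weights are hypothetical and picked by hand for illustration. A real deep learning model learns its weights from data and usually stacks many more layers.

```python
def neuron(inputs, weights, bias):
    # Weighted sum of the inputs plus a bias, followed by a step activation.
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0


def layer(inputs, units):
    # A layer is a list of (weights, bias) pairs, all applied to the same inputs.
    return [neuron(inputs, w, b) for w, b in units]


# Hypothetical hand-picked weights (a trained network would learn these).
HIDDEN = [
    ([1.0, 1.0], -0.5),   # simpler concept 1: OR
    ([-1.0, -1.0], 1.5),  # simpler concept 2: NAND
]
OUTPUT = [([1.0, 1.0], -1.5)]  # combines the two simpler concepts with AND


def xor_network(a, b):
    hidden = layer([a, b], HIDDEN)   # first level of the hierarchy
    return layer(hidden, OUTPUT)[0]  # second level builds on the first


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

To add another level to the hierarchy, you call `layer` once more on the previous level’s output. Each extra call makes the network one layer deeper.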

How was deep learning born?

Contrary to popular belief, deep learning is not a particularly new field of study. In fact, it was born in the 1940s, but under another name: cybernetics. At that time researchers were motivated by neuroscience, which means that they were trying to build models that could emulate the biological brain (and yes, not only the human brain). As a result, deep learning has also gone by the name “artificial neural networks”. It was during this period that the most basic brain-inspired models were built, each intended to emulate the action of a single neuron.
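To make “emulating a single neuron” concrete, here is a minimal Python sketch of an artificial neuron in the spirit of those early models. It multiplies each input by a weight, adds a bias, and “fires” (outputs 1) only if the total is above zero. The function name `neuron` and the parameters below are hypothetical and picked by hand so that the unit behaves like a logical AND gate. This is an illustration, not a reconstruction of any specific historical model.

```python
def neuron(inputs, weights, bias):
    """A single artificial neuron: weighted sum of the inputs plus a bias,
    followed by a step activation that outputs 1 ("fires") or 0."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0


# Hypothetical, hand-picked parameters that make the neuron act as a logical AND.
AND_WEIGHTS = [1.0, 1.0]
AND_BIAS = -1.5

# Print the truth table: the neuron fires only when both inputs are 1.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, neuron([a, b], AND_WEIGHTS, AND_BIAS))
```

A single unit like this can only split its inputs with a straight line, so no choice of weights lets it compute XOR. XOR outputs 1 only when exactly one input is 1.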

Of course, the approach of emulating a biological brain by working with individual neurons was too simple and had many limitations, so by the end of the 1960s, there was a major dip in the popularity of neural networks.

In the 1980s deep learning (or neural networks) became popular again, this time under the name of connectionism. Connectionism arose in the context of cognitive science, an interdisciplinary approach to studying the mind that combines multiple levels of analysis. The central idea of connectionism is that a large number of simple units (neurons), when networked together, can achieve intelligent behavior. By the mid-1990s some unrealistic claims about what neural networks could do had emerged, leading to unreasonable expectations. Of course, these expectations were not fulfilled, which led to another dip in the popularity of neural networks.

It was not until 2006 that the third wave of neural network research began. Some people argue that one of the reasons for this comeback is that only then was the technology advanced enough to train such complex models. This is also when the term “deep learning” became popular, emphasizing that researchers could now work with deeper networks than had been possible before. It also marked the beginning of the success of deep learning-based models, which started to outperform competing AI systems based on other machine learning technologies! Nowadays we’re still in the third wave of popularity of neural networks, and truth be told, it doesn’t look like this popularity is going to fade any time soon.

Deep learning and computer vision

Computer vision was one of the fields that took advantage of the increasing popularity of deep learning. In fact, a dramatic turning point for computer vision came in 2012, and it was due to deep learning. That year, Alex Krizhevsky and his team won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) by presenting a deep learning model capable of recognizing objects in images.

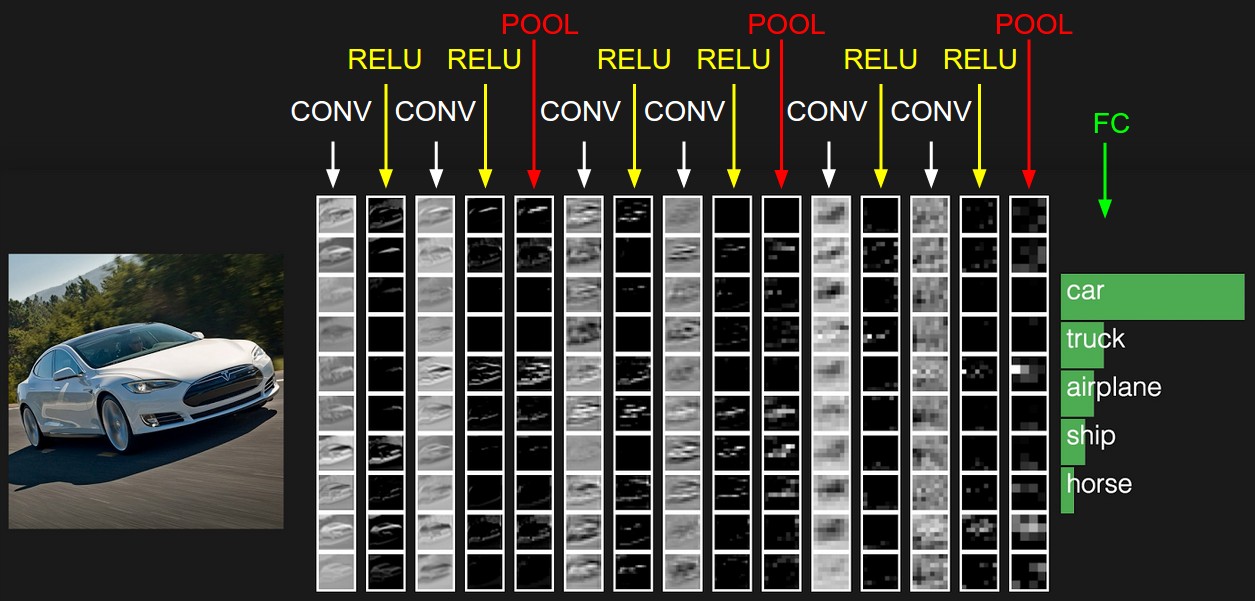

Ever since then deep learning appeared as a solution to many of the problems of computer vision, whether it be object detection, object segmentation, image captioning, among others. And most of the deep learning-based solutions come by the hand of convolutional neural networks (CNNs), but that’s something we’ll save for the next article!

Image of a convolutional neural network used from object recognition. The image shows how the network recognizes that the image corresponds to a car. Image taken from the CS231n course at Stanford University